I am a second-year CS Ph.D. student at Michigan State University (MSU), focusing on 3D computer vision and advised by Prof. Xiaoming Liu. I received my Bachelor's and Master's degrees in Computer Science from Xi’an Jiaotong University (XJTU). Prior to joining MSU, I interned at the University of Illinois Urbana-Champaign (UIUC) with Prof. Yu-Xiong Wang.

Email / LinkedIn / Google Scholar / Twitter (X) / GitHub

My research interests lie at the intersection of multi-modal learning and computer vision, with the long-term goal of empowering computational models to better perceive and interact with the 3D visual world. Currently, I'm working on:

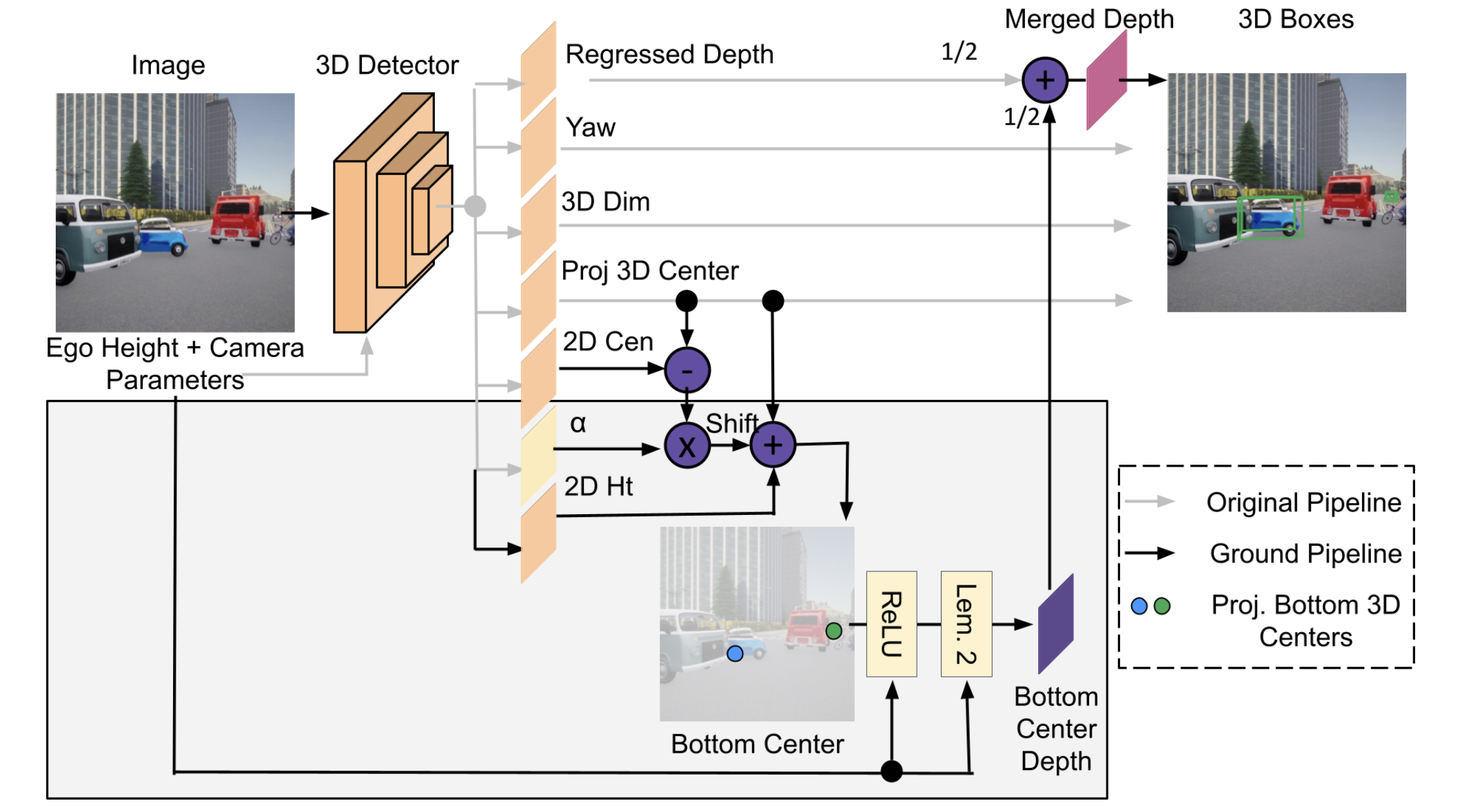

Abhinav Kumar, Yuliang Guo, Zhihao Zhang, Xinyu Huang, Liu Ren, Xiaoming Liu

ICCV, 2025

Project Page / Code / arXiv

Highlights the understudied problem of Mono3D generalization to unseen ego heights.

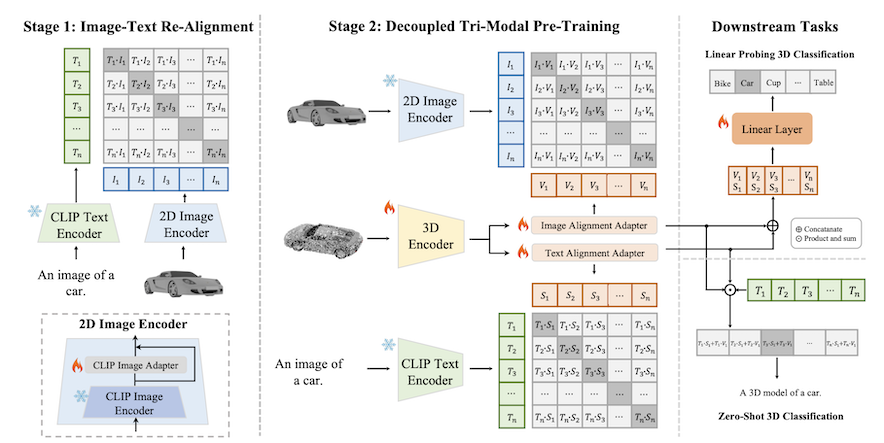

Zhihao Zhang*, Shengcao Cao*, Yu-Xiong Wang

CVPR, 2024

Project Page / Code / arXiv

Introduces TriAdapter Multi-Modal Learning (TAMM), a novel two-stage learning approach based on three synergistic adapters for the different modalities in pre-training.

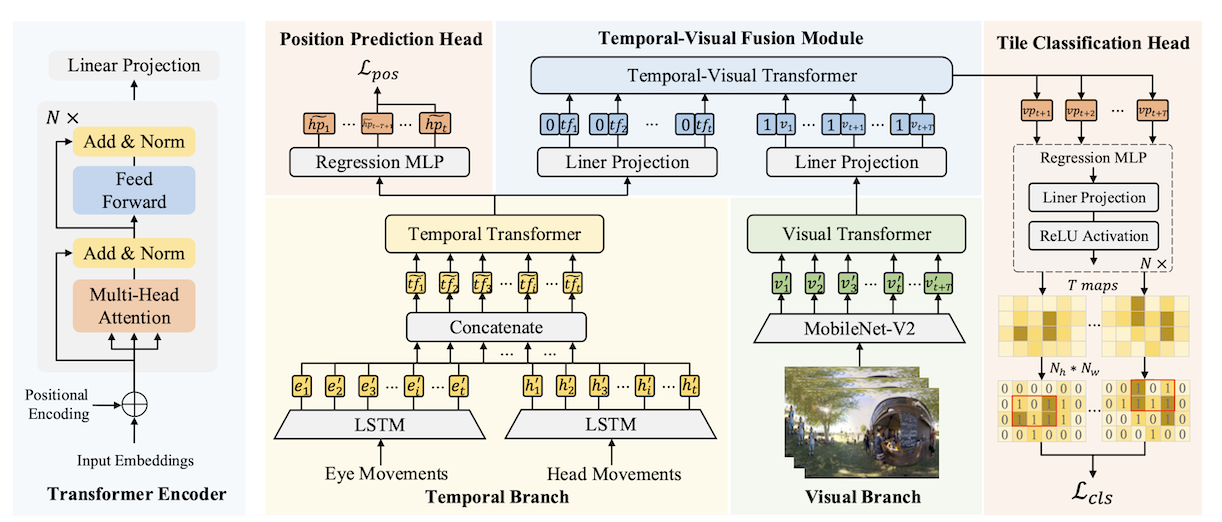

Zhihao Zhang*, Yiwei Chen*, Weizhan Zhang, Caixia Yan, Qinghua Zheng, Qi Wang, Wangdu Chen

ACM MM, 2023

Code / arXiv

Proposes a tile-classification-based viewport prediction method with a Multi-modal Fusion Transformer to improve the robustness of viewport prediction.

(* denotes equal contribution)